This Day in UMS: Twentieth Annual UMS May Festival

Editor’s Note: “This day in UMS History” is an occasional series of vignettes drawn from UMS’s historical archive. If you have a personal story or particular memory from attending the performance featured here, we’d love to hear from you in the comments.

May 14-17, 1913: Twentieth Annual UMS May Festival

The Twentieth Annual May Festival, the opening series of concerts at Hill Auditorium, presented five concerts featuring the Chicago Symphony Orchestra, the UMS Choral Union, and the Children’s Choir. The opening concert included works by Wagner, Beethoven, A.A. Stanley, and Brahms.

Hill Auditorium celebrated its one hundredth birthday on May 14, 2013. If you’d like to learn more about our history with the storied venue, watch our documentary A Space for Music, A Seat for Everyone.

Interviews with Uncommon Virtuosos: Robert Alexander

Photo: Robert Alexander. Photo by Kärt Tomberg.

Robert Alexander is a sonification specialist working with the Solar and Heliospheric Research Group at the University of Michigan, where he is pursuing a PhD in Design Science. He also collaborates with scientists at NASA Goddard, using sonification to investigate the sun and the solar wind.

We met Robert this fall and got to talking about “uncommon virtuosos” – those who master an instrument or genre in unexpected ways, whether Colin Stetson on the baritone saxophone this January or Chris Thile on the mandolin last October.

We sat down with Robert to talk about the nuts and bolts of sonification, his new work sonifying solar wind and DNA, and whether he’d consider the sounds of the sun improvisation.

Nisreen Salka: How did you get into sonification?

Robert Alexander: My background is in music composition and interface design, which were the focus of my undergraduate and master’s studies, and more recently I’ve transitioned into applying those disciplines to scientific investigation. I came to work with sonification specifically when I was approached by Thomas Zurbuchen, the leader of the Solar and Heliospheric Research Group (SHRG), and they had this crazy idea: hey, let’s take our data, turn it into sound, and see if we can learn anything new.

NS: Could you describe sonification for the laymen among us? How does that relate to your previous experience in music composition?

RA: Sonification is the use of non-speech audio to convey information. And the idea is that rather than visualizing data and creating a graph or something of that nature, we can instead create an auditory graph. So if we have something like a data value that’s constantly rising, rather than displaying that as a line that constantly goes up, we can have a sound that constantly goes up in pitch.
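As a rough illustration of the rising-pitch idea described above, the Python sketch below linearly maps each data value onto a frequency between 220 Hz and 880 Hz and renders one short sine tone per value. The function names, the frequency range, the sample rate, and the tone length are all hypothetical choices for this example, not details of the SHRG project’s actual tools.

```python
import math


def map_to_pitch(values, f_min=220.0, f_max=880.0):
    """Linearly map each data value onto a frequency in Hz.

    The lowest value maps to f_min and the highest to f_max (both are
    hypothetical defaults chosen only for illustration).
    """
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # constant data would otherwise divide by zero
    return [f_min + (v - lo) / span * (f_max - f_min) for v in values]


def render_tones(freqs, sample_rate=8000, seconds_per_value=0.1):
    """Render one sine segment per frequency as floats in [-1, 1].

    Phase is carried across segments so the waveform has no jumps
    (and hence no clicks) where the pitch changes.
    """
    samples_per_value = round(sample_rate * seconds_per_value)
    samples = []
    phase = 0.0
    for f in freqs:
        step = 2 * math.pi * f / sample_rate  # phase advance per sample
        for _ in range(samples_per_value):
            samples.append(math.sin(phase))
            phase += step
    return samples


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5]  # a steadily rising data series
    freqs = map_to_pitch(data)
    audio = render_tones(freqs)
    print(freqs, len(audio))
```

A steadily rising series such as [1, 2, 3, 4, 5] maps to 220, 385, 550, 715, and 880 Hz, so the tone climbs in even steps; the samples could then be written to a WAV file with Python’s standard `wave` module. A linear frequency mapping is the simplest choice; many sonification designs instead map data onto a logarithmic (musical) pitch scale so that equal data steps sound like equal musical intervals.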

NS: In your mini documentary about the project with the SHRG, you talk about including a drum beat and an alto voice to better personify the satellite data. How did you figure out which musical elements would work?

RA: Well, it’s a process where I first begin by getting as close to the data and the underlying science as possible, because I want to let the data speak for itself and try to find the natural voice of the data. Once I get a sense for what the data are actually saying and what the data parameters are actually telling me, then I can start to think a little bit about, okay, how could this unfold musically?

And that’s largely a process of trying to work with informed intuition. I’m trying to think about what sort of knowledge the listener is going to bring to the listening process, and I try to work with sounds that might be intuitive.

NS: Sonification seems like a medium for improvisation, but there’s a lot of structural overlay. Do you, as a composer, consider your work improvisational?

RA: I think there’s very much an improvisational element, and you’re going to find that in scientific research even though you may expect it less. We’re talking about an interaction in which we feel so connected with our instruments that we are able to go from an idea to an expression of that idea in a very short amount of time. And one of my goals has been to take this kind of immediacy and try to infuse the sciences with that immediate feedback.

NS: It sounds like sonification is really a combination of two disciplines. How did this fusion happen for you? What about this process attracts you both as a scientist and as an artist?

RA: As a composer, I’ve always used music to explore my own psyche and the human condition as a form of self-expression. I think the largest shift that took place in my thinking as a composer was this kind of shift from thinking about music and asking, “What do I want to express?” to instead thinking, “How could I use these compositional tools to allow something like the sun to express itself?” I saw this as an opportunity to take something I really love and channel that through a rich learning process.

When science and music happen at a very high level, both are infused with creativity. And I think the idea of that separation between art and science is more of a modern invention. When I think about these two disciplines, I tend to think about the things that bring them together. A lot of science has sprung forward through different types of intuitive leaps, and I think that it’s this place of intuition that plays into the process of being a creative professional or of being a scientific researcher. That’s the core of a lot of the work that I do.

NS: How do you think people receive what you do? What is their perception when they see the final result?

RA: I think many people initially think, “Well, that’s cool.” But for me, the question isn’t “How do we make something cool?” The question is “How do we learn something new?” When we are able to uncover something in a data set with our ears, it’s still cool. But when we actually learn something from it, it also becomes powerful.

In all of my work, I try to pair the music and the data such that I’m explaining how the musical form is drawn directly from the underlying data set. I then encourage the listener to absorb the music and investigate the underlying science at the same time, because that’s when sonification really becomes powerful: when it can fuel the learning process. It takes the abstract and the esoteric and makes it more approachable.

NS: We know that one of your newer projects is the sonification of DNA. How does that relate to your previous work? What about it is new and what about it is similar to what you have done in the past?

RA: This project was an opportunity to take everything I have learned in the space sciences and to apply that to a new discipline in which I had limited prior knowledge. Just like working with solar wind data, we were working with incredibly large amounts of information. Millions of data points could potentially be passing by the ear over the course of a minute.

We were successful in creating an auditory translation, where you can hear where the transcription process starts and ends, and you can hear the structure of the gene. It was really fascinating to have a sense for how this would unfold temporally rather than just seeing it as a sequence of numbers or letters.

A lot of genetic research has also used spectral analysis to study repeated elements in DNA. And it turns out that if we take that raw data and turn it into sound, we can hear those repeated elements. Sonification could potentially allow us to hear some of the subtle details in these structures.
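One simple way to see why repeated elements can become audible: if each base is converted to a number and the sequence is played back as audio samples, a tandem repeat with a unit of p bases produces a waveform that repeats every p samples, which the ear hears as a pitch of roughly sample_rate / p. The Python sketch below illustrates that idea under stated assumptions and is not the method used in Alexander’s project: the numeric level assigned to each base is arbitrary, the function names are hypothetical, and a plain autocorrelation stands in for the spectral analysis he mentions.

```python
# Hypothetical numeric level for each base; any four distinct values would do.
LEVELS = {"A": -3.0, "C": -1.0, "G": 1.0, "T": 3.0}


def dna_to_signal(seq):
    """Convert a DNA string into a zero-mean numeric signal (one sample per base)."""
    values = [LEVELS[base] for base in seq.upper()]
    mean = sum(values) / len(values)
    return [v - mean for v in values]  # remove the DC offset so it doesn't dominate


def dominant_period(signal, max_lag):
    """Return the lag (in samples) with the highest average autocorrelation.

    Multiples of the true period score about as high, so a real analysis
    would need more care; on exact ties the smallest lag wins.
    """
    n = len(signal)
    best_lag, best_score = None, float("-inf")
    for lag in range(1, max_lag + 1):
        score = sum(signal[i] * signal[i + lag] for i in range(n - lag)) / (n - lag)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    sample_rate = 8000  # hypothetical playback rate, samples per second
    period = dominant_period(dna_to_signal("CAG" * 200), max_lag=5)
    print(period, sample_rate / period)
```

For a repeat of the three-base unit CAG, the strongest lag is 3, so at the hypothetical playback rate of 8,000 samples per second the repeat would sound near 8000 / 3 ≈ 2,667 Hz.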

NS: Is there anything else we haven’t covered that you want to add about your projects?

RA: There are still a lot of mysteries when it comes to solar science. And we are looking at sonification as a way to gain new insights. Working at NASA Goddard alongside several research scientists there, we actually uncovered quite a variety of new features with our ears that we’re now investigating through traditional research methods.

And so for me, it’s a really exciting time. We came into the project wondering if we’d find something new, and now we’re at the point where I can say that we have been successful in uncovering new science through the process of data sonification, and we’re continuing to discover new things.

Learn more about Robert’s work here. Questions or thoughts? Leave them in the comments below.

This Day in UMS: Enrico Caruso

Editor’s Note: “This day in UMS History” is an occasional series of vignettes drawn from UMS’s historical archive. If you have a personal story or particular memory from attending the performance featured here, we’d love to hear from you in the comments.

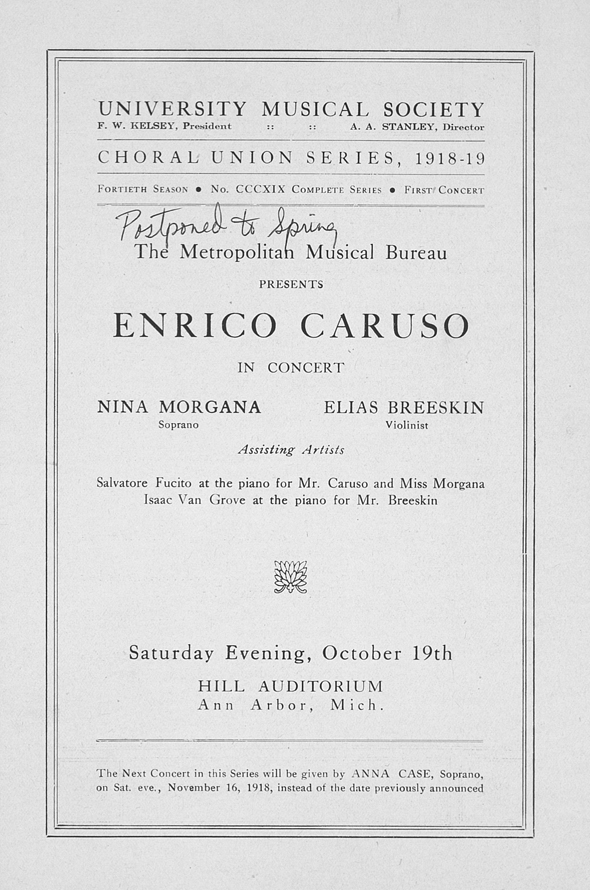

October 19, 1918: Enrico Caruso with Nina Morgana, soprano, and Elias Breeskin, violinist

Photo: (Left) Enrico Caruso with his piano. (Right) UMS concert program.

Near the end of his 25-year career, Italian tenor Enrico Caruso was scheduled to sing selections from several operas alongside soprano Nina Morgana and violinist Elias Breeskin at Hill Auditorium in October of 1918. The performance was postponed until March of the following year due to the Spanish flu pandemic.

The first singer to sell over a million copies of a recording, Caruso charmed audiences the world over and became the first global media celebrity thanks to new recording technology. His performance at UMS included an extensive program of ten selections, all noted for their difficulty and beauty, including Souvenir de Moscou, Celeste Aida, Una Furtiva Lagrima, and Vesti La Giubba, and concluded with The Star-Spangled Banner.